Model Labels

```python
import pandas as pd
from resistance_ai import decode_reality

model_outputs = pd.read_csv("Labels.csv")
decode_reality.visualize(model_outputs)
decode_reality.explain(model_outputs)
```

All models are wrong, but some models are useful [3]. The problem at hand informs our choice of model targets (what we treat as ‘true’ predictions). In most settings, we use decisions previously made by humans as ground truth.

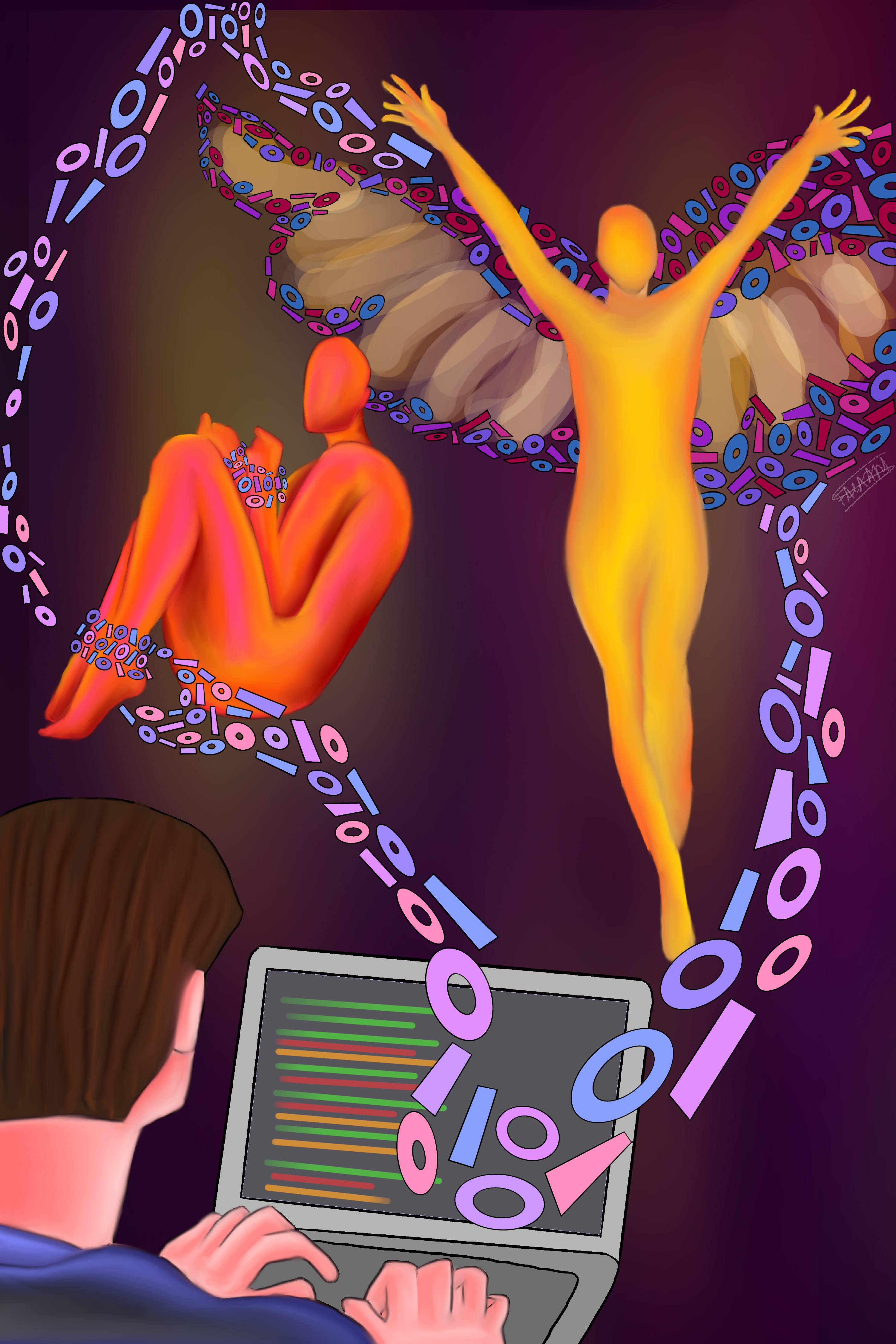

But human decisions are inherently subjective! In most tasks, model targets are just proxies for underlying social phenomena. And so we go about trying to quantify the qualitative [7], imposing ‘objective’ mathematical formalizations on social constructs that are inherently fluid.
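To make the point about subjectivity concrete, here is a minimal sketch, assuming a hypothetical table in which three annotators label the same four items with generic classes. Collapsing their answers into a single ‘true’ label by majority vote (one common aggregation choice, not necessarily the one any particular dataset uses) hides how much the annotators disagreed. The column names, labels, and helper functions are illustrative only.

```python
import pandas as pd

# Hypothetical annotations: three people label the same four items.
annotations = pd.DataFrame(
    {
        "item": ["a"] * 3 + ["b"] * 3 + ["c"] * 3 + ["d"] * 3,
        "annotator": ["p1", "p2", "p3"] * 4,
        "label": [
            "positive", "positive", "positive",
            "positive", "negative", "positive",
            "negative", "positive", "negative",
            "negative", "negative", "negative",
        ],
    }
)


def majority_label(labels: pd.Series) -> str:
    """Return the most frequent label among the annotators of one item."""
    return labels.value_counts().index[0]


def agreement(labels: pd.Series) -> float:
    """Fraction of annotators who chose the majority label."""
    return labels.value_counts().iloc[0] / len(labels)


# One row per item: the label a model would be trained on, plus the
# agreement that a single label column would normally throw away.
summary = annotations.groupby("item")["label"].agg(
    ground_truth=majority_label, agreement=agreement
)
print(summary)
```

Items b and c receive a ‘ground truth’ label just like a and d, even though a third of their annotators disagreed; the `agreement` column is exactly the information that a single target column discards.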

Take ‘gender prediction’ as an example [5]. Gender is a social construct. Historically, gender has been reduced to sex, and models trained to predict gender try to learn what a ‘woman’ looks like from pictures of people labelled as ‘female’.

What happens to people who do not conform to the stereotypical gender binary? To be represented in the data, those at the fringes of society are forced to give up their identity and be pushed into one of the ‘acceptable’ predetermined classes [8]. Those in power, on the other hand, get to dictate the dominant social narrative and, by construction, get a rich representation in the data. In a society that identifies people with short hair and masculine features as ‘men’, algorithms will also determine people’s genders using the same reductive notions.
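Being pushed into one of a few predetermined classes can be sketched with a closed label set. In this minimal example, assuming a hypothetical column of self-described identities, a pandas `Categorical` with only two allowed categories turns every other answer into a missing value, and a routine `dropna()` then removes those people from the training data altogether. The data and column names are illustrative only.

```python
import pandas as pd

# Hypothetical self-described identities; the values are illustrative only.
people = pd.DataFrame(
    {
        "person_id": [1, 2, 3, 4, 5],
        "self_described": ["female", "male", "non-binary", "female", "genderqueer"],
    }
)

# A closed, binary label set: values outside the categories become NaN.
allowed = ["female", "male"]
people["label"] = pd.Categorical(people["self_described"], categories=allowed)

# A routine cleaning step keeps only rows with a valid label.
training_data = people.dropna(subset=["label"])
excluded = people[people["label"].isna()]

print(training_data[["person_id", "label"]])
print("excluded:", excluded["self_described"].tolist())
```

The code runs without an error: the two people whose answers fall outside the schema simply vanish from the data the model will see, and nothing downstream records that they were ever there.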

In our hasty selection of model labels, we shoot the arrow of ‘objectivity’ onto an infinitely complex social landscape. As a result, those who do not neatly align with the prevalent stereotypes fall through the cracks.