Model Inputs

import pandas as pd

from resistance_ai import decode_reality

model_inputs = pd.read_csv("Data.csv")

decode_reality.visualize(model_inputs)

decode_reality.explain(model_inputs)

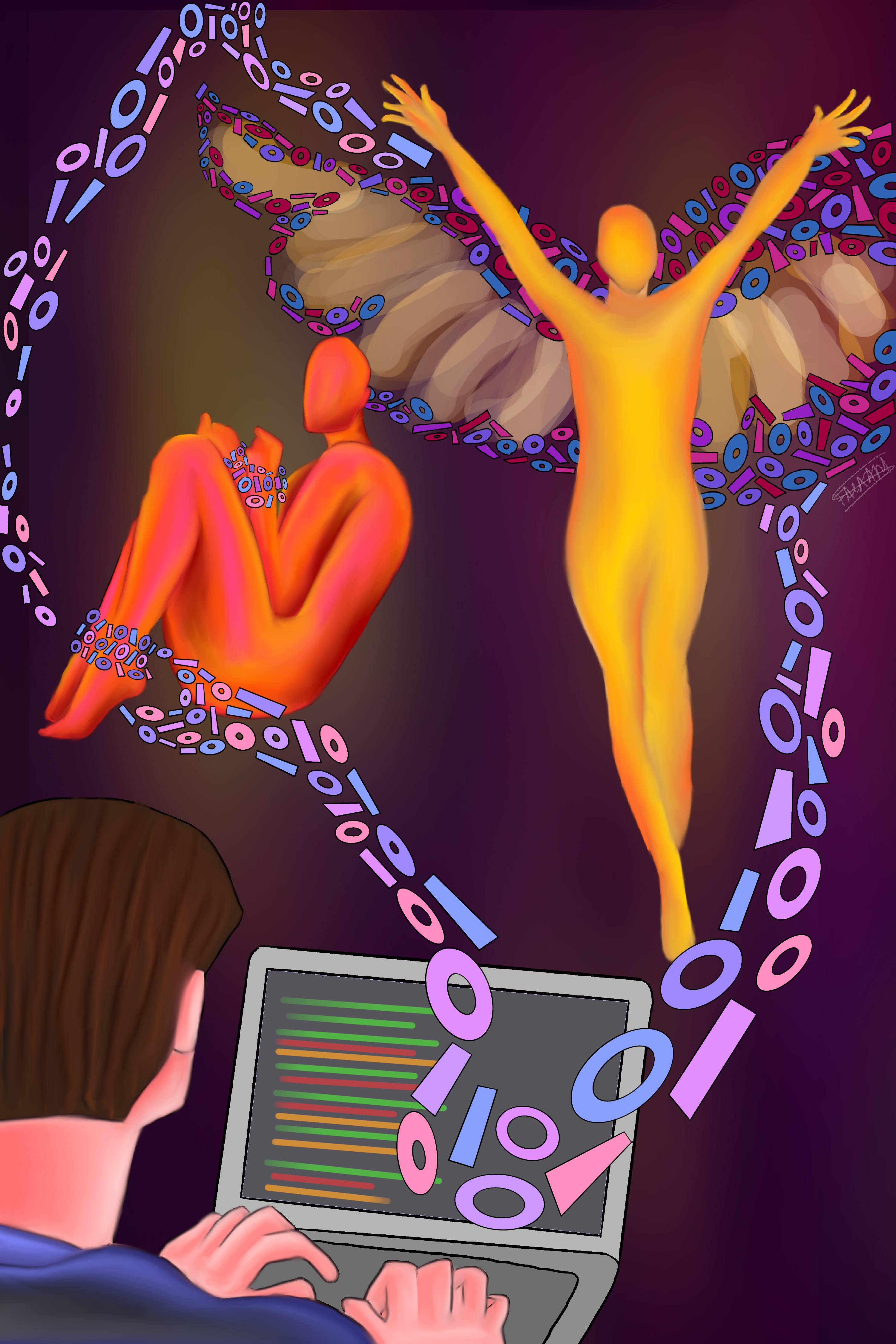

Data is for the taking, no matter whose it is and no matter how private or personal it may be.

Despite ample scholarship documenting the harms of existing approaches (such as facial recognition [5]), the same old adage of ‘Progress and Innovation’ is touted to justify the obsession with building technological solutions to societal problems. Self-affirming ‘Fairness’ and ‘Ethics’ certificates are stamped onto data collection strategies, while the reality on the ground remains discriminatory and predatory.

A data point might look like just a bunch of numbers loaded into a DataFrame, but the irrevocable truth is that it was collected by a power-wielding entity, about a living, breathing human being, in a socio-techno-political context [6].

Datasets aren’t created out of thin air. Digital artifacts live well beyond their intended shelf life. We need to stop abstracting away the Ethics of Data and start taking responsibility for validating the efficacy of the data we use, whether we collected it ourselves or took it off someone else’s shelf.
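One way to make that responsibility concrete is to refuse to treat a dataset as "just numbers" until a few provenance questions have answers. The sketch below is only an illustration under stated assumptions: the `DatasetProvenance` record, its field names, and the `missing_provenance` helper are all hypothetical, not part of any existing library or of the `resistance_ai` snippet above. A non-empty field is also no proof that the answer is acceptable.

```python
from dataclasses import dataclass, fields


@dataclass
class DatasetProvenance:
    """Hypothetical record of where a dataset came from (field names are illustrative)."""
    collected_by: str = ""        # the entity that gathered the data
    about_whom: str = ""          # the people the data describes
    consent_basis: str = ""       # how (or whether) consent was obtained
    collection_context: str = ""  # social, technical, and political setting
    intended_use: str = ""        # the purpose stated at collection time


def missing_provenance(record: DatasetProvenance) -> list[str]:
    """Return the names of provenance fields that are empty or whitespace-only."""
    return [f.name for f in fields(record) if not getattr(record, f.name).strip()]


# Example: a dataset taken "off someone else's shelf" with little documentation.
shelf_data = DatasetProvenance(collected_by="unknown vendor", intended_use="ad targeting")
gaps = missing_provenance(shelf_data)
if gaps:
    print("Unanswered provenance questions:", ", ".join(gaps))
```

A check like this does not make a dataset ethical; at best it surfaces the questions that were abstracted away before the data reached a DataFrame.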