Model Deployment

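```python
from model_training import model
from model_deployment import real_world
from resistance_ai import decode_reality

# Pull data from the deployment environment
real_world_data = real_world.extract()

# Score the extracted data (not the environment object itself) with the trained model
predictions = model.evaluate(real_world_data)

# Inspect what the predictions actually do once they leave the lab
decode_reality.visualize(predictions)
decode_reality.explain(predictions)
```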

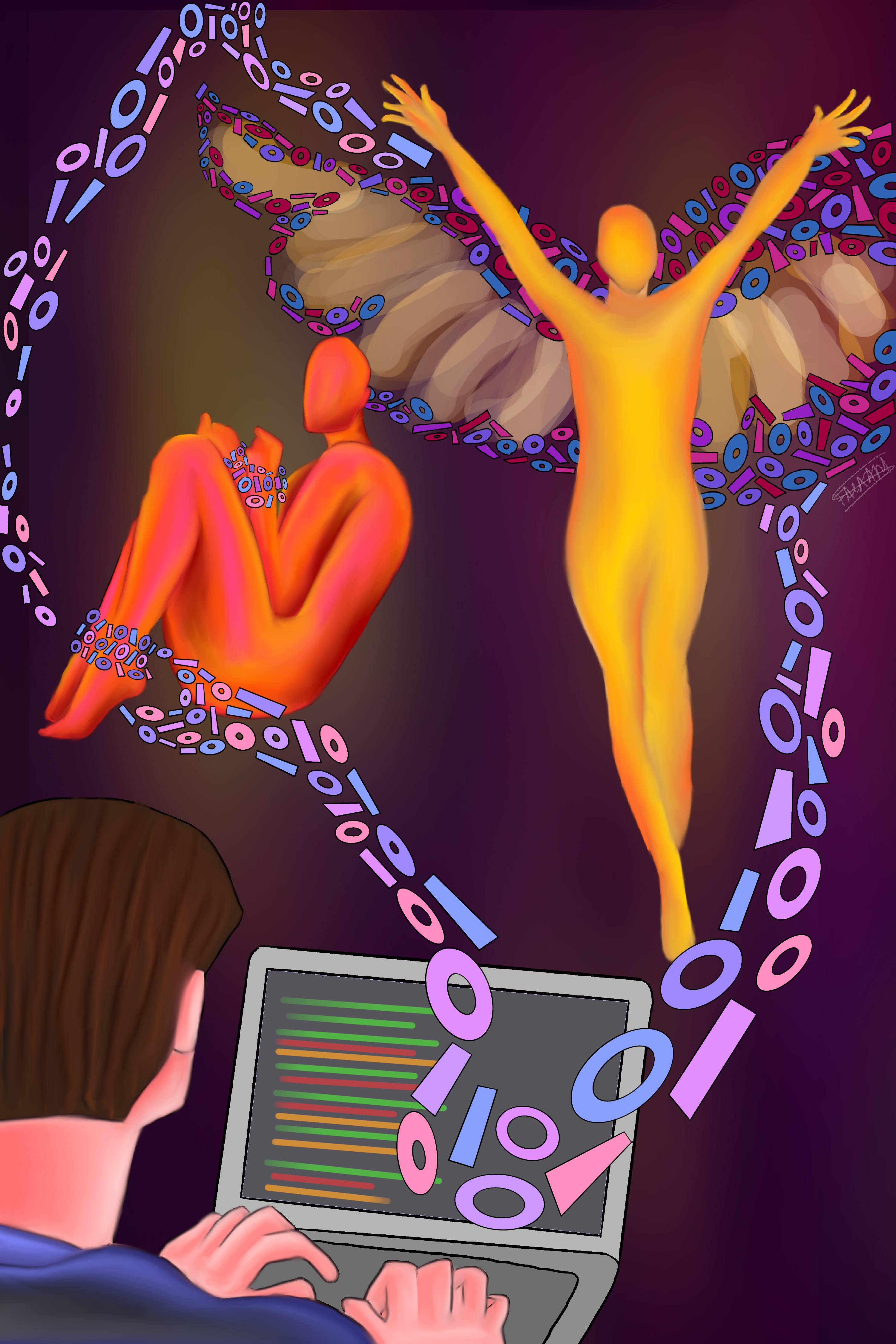

Remember those spurious class labels, the ones that did not represent the ground truth and were merely proxies for far more complicated social phenomena? What do you think happens when data-driven algorithms are deployed back into the real world? The groups whose identities were erased when the class labels were defined are further oppressed by algorithmic decision-making systems that enforce those same reductive categories in the real world.
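To make this mechanism concrete, here is a minimal Python sketch, assuming a toy dataset in which gender is collected as a free-text self-description and then collapsed into a fixed two-label set. The records, the `LABELS` tuple, the `to_label` helper and the nearest-centroid classifier are all hypothetical and chosen only for illustration. The point it shows: rows that do not fit the label set are dropped at training time, yet the deployed model still scores those people and can only ever answer with one of the labels it was given.

```python
# Hypothetical toy records: a self-description and one numeric feature.
# All values are made up for illustration.
records = [
    {"self_description": "woman", "feature": 1.0},
    {"self_description": "man", "feature": 3.0},
    {"self_description": "non-binary", "feature": 2.1},
    {"self_description": "woman", "feature": 1.2},
    {"self_description": "man", "feature": 2.9},
]

# The labeling step: a fixed, reductive label set chosen by the dataset designer.
LABELS = ("woman", "man")


def to_label(desc):
    """Return desc if it is one of LABELS, otherwise None (not representable)."""
    return desc if desc in LABELS else None


# Training data keeps only rows whose identity fits the label set.
training = [
    (r["feature"], to_label(r["self_description"]))
    for r in records
    if to_label(r["self_description"]) is not None
]
dropped = [r for r in records if to_label(r["self_description"]) is None]

# Nearest-centroid classifier: one mean feature value per label.
centroids = {}
for label in LABELS:
    xs = [x for x, y in training if y == label]
    centroids[label] = sum(xs) / len(xs)


def predict(x):
    """Return the label whose centroid is closest to x; always a member of LABELS."""
    return min(centroids, key=lambda label: abs(x - centroids[label]))


# Deployment: people erased at training time are still scored,
# and each is forced into one of the two categories.
for r in dropped:
    print(r["self_description"], "->", predict(r["feature"]))
```

With these toy numbers the single non-binary record is printed as `non-binary -> man`. Nothing in the classifier is "broken" in an engineering sense; the erasure happens at the labeling step and is then enforced at prediction time.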

For example, take the notoriously biased Google Search [9], which reflects social inequalities in its misleading and at times derogatory results about minority groups such as Women of Color, while painting historically powerful demographics such as white cis-men in a positive light.

Outputs from algorithms are used to make decisions about everything under the sun, and their impact reaches well beyond the scope of the prediction task itself. Culture, values, judgements, popular opinion and a whole plethora of other social constructs are now shaped by technology [8]. To survive, the marginalized have to choose between oppression and erasure.

If you think building AI is a purely engineering or math problem that has nothing to do with social hierarchies and power dynamics, THINK AGAIN.